OpenAI and Artificial General Intelligence: The Wild Quest for a Thinking Machine

Artificial General Intelligence (AGI) sounds like the tech industry’s version of the Holy Grail. Everyone wants it, no one quite knows what it looks like, and the path to get there is filled with theories, algorithms, and a surprising number of ethical dilemmas. The term comes up more and more as researchers inch toward truly human-like AI. At the center of this quest? OpenAI, whose push toward AGI is helping set the pace for the whole field.

Yes, the same organization behind GPT, DALL·E, and the AI that helped you write your last email. OpenAI isn’t just playing around with smart chatbots; they’re aiming for AGI. But what does that even mean?

Let’s take a long, honest, and occasionally ridiculous journey through OpenAI’s pursuit of AGI, what it involves, what it risks, and why you should care (even if your current biggest concern is whether your smart fridge is judging your snack habits).

What Even Is AGI?

AGI stands for Artificial General Intelligence. Not to be confused with your phone’s auto-correct that keeps changing “ducking” to something very not family-friendly.

AGI means an AI that can learn and understand anything a human can: reason, solve new problems, adapt to unfamiliar situations, and maybe even make a sarcastic joke or two.

It’s not just book-smart. It’s street-smart, emotionally aware, and capable of moving between topics and skills like a caffeinated genius with a podcast addiction.

From GPT to AGI: OpenAI’s Roadmap

OpenAI started with big dreams and bigger questions. With models like GPT (Generative Pre-trained Transformer), they’ve been scaling their way toward AGI.

Each GPT iteration (GPT-2, GPT-3, GPT-4, and the newer “o” models) has shown more fluency, reasoning, and creativity. But does that mean we’re close to AGI?

Not quite. These models are still considered narrow: they’re brilliant with language but don’t understand the world the way humans do. Think of GPTs as really good improv actors. They sound confident, but under the hood they’re predicting the next word (technically, the next token) one step at a time.
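To make “one word at a time” concrete, here is a minimal sketch: a toy bigram model that counts which word follows which in a tiny made-up corpus, then generates text by repeatedly appending the most likely next word. Real GPT models use transformer networks over tokens, not simple word counts, so treat this only as an illustration of the generation loop; the corpus and the function names `train_bigrams` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often every other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedy generation: append the most frequent next word, one step at a time."""
    output = [start]
    for _ in range(length):
        candidates = follows.get(output[-1])
        if not candidates:  # no known continuation, so stop early
            break
        output.append(candidates.most_common(1)[0][0])
    return output


# Hypothetical toy corpus, purely for illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(" ".join(generate(model, "the", 4)))  # prints: the cat sat on the
```

The model has no idea what a cat is; it only knows which words tend to follow which, which is the improv-actor point in miniature.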

The Training Playground: How OpenAI Trains Smart AIs

How do you teach a machine to think? You start with a massive dataset of text from books, websites, and forums (yes, probably including your old embarrassing tweets).

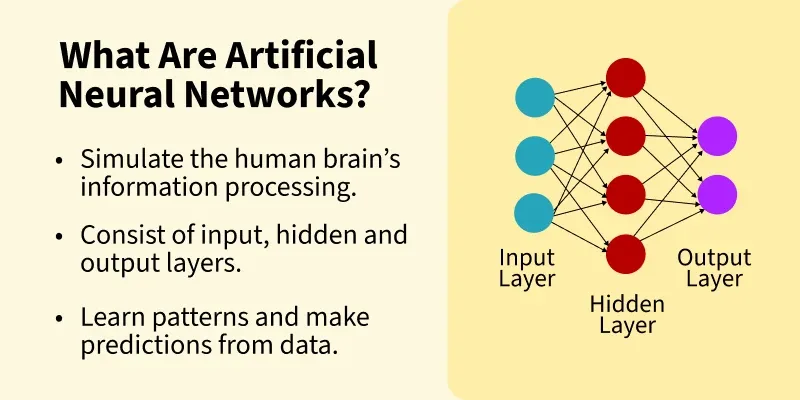

Then you build neural networks with billions (sometimes trillions) of parameters. Think of parameters as dials: training adjusts them, bit by bit, until the AI can predict, analyze, and respond in surprisingly human-like ways.
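Here is a minimal sketch of what “turning the dials” means, assuming the simplest possible model: one that predicts y = w·x + b using just two parameters, `w` and `b`. Gradient descent nudges each dial in the direction that shrinks the prediction error. Real networks apply the same idea to billions of dials and far more complex functions; the data points, learning rate, and the name `train_two_dials` are hypothetical choices for illustration.

```python
def train_two_dials(
    data: list[tuple[float, float]], lr: float = 0.05, steps: int = 2000
) -> tuple[float, float]:
    """Fit y approximately equal to w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # both dials start at zero
    n = len(data)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to each dial.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Turn each dial a small step against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Points generated from y = 3x + 1, so the ideal settings are w = 3, b = 1.
points = [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0), (3.0, 10.0)]
w, b = train_two_dials(points)
print(f"w = {w:.2f}, b = {b:.2f}")  # prints: w = 3.00, b = 1.00
```

Nobody sets the dials by hand; the training loop finds them from the data, which is exactly what happens (at vastly larger scale) inside a language model.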

And finally, you use reinforcement learning (basically digital trial-and-error), often guided by human ratings of which answers are better, to help it improve.
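Reinforcement learning for chat models is far more involved than this, but the core trial-and-error loop can be sketched with a classic toy problem, the multi-armed bandit: try actions, observe rewards, and gradually favor what works. This is a swapped-in textbook technique (epsilon-greedy), not OpenAI’s training method; the reward probabilities, `epsilon`, and the name `epsilon_greedy` are hypothetical.

```python
import random


def epsilon_greedy(
    reward_probs: list[float], steps: int = 5000, epsilon: float = 0.1, seed: int = 0
) -> list[float]:
    """Learn by trial and error which action pays off best; return value estimates."""
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    estimates = [0.0] * n_actions  # learned value of each action
    counts = [0] * n_actions  # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try something random
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit best guess
        # The environment pays 1 with the action's (hidden) probability, else 0.
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental average: move the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


values = epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(len(values)), key=lambda a: values[a])
print(f"best action: {best}, estimates: {[round(v, 2) for v in values]}")
```

With these settings the agent should end up favoring the last action, the one with the highest payoff rate, without ever being told which one that is. The small `epsilon` keeps it occasionally exploring so it doesn’t lock onto an early lucky guess.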

Safety First, Right?

OpenAI doesn’t just want to build AGI. They want to make sure it doesn’t, you know, end humanity.

They’ve published safety policies, run red-teaming exercises (where testers deliberately try to make a model misbehave), and even formed governance structures to guide ethical development. It’s like raising a super-smart kid and constantly worrying they’ll outgrow you and hack the bank.

Alignment: Teaching AGI to Want What We Want

This is where it gets philosophical. It’s not enough for AGI to be smart; it needs to understand human values. And that’s hard.

How do you make sure your AGI doesn’t think the best way to “save the planet” is to remove humans? Alignment research tries to bridge that gap.

OpenAI’s Team: Brainiacs, Dreamers, and Doom-Preventers

OpenAI isn’t a lone genius in a garage. It’s a team of researchers, engineers, ethicists, and… meme lords? Possibly.

Their goal is to make AGI that benefits all of humanity, not just the rich, not just the tech bros, but everyone, from kindergarteners to grandmas.

Milestones That Make You Say “Whoa”

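OpenAI’s systems have posted strong scores on professional and academic exams (GPT-4 landed around the top 10% of test takers on a simulated bar exam), written working code, composed poetry, and even debated humans.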

Each milestone feels like sci-fi creeping into reality. One day it’s autocomplete, the next it’s AI writing your wedding vows.

| Year | Milestone | What It Did |
|---|---|---|
| 2018 | GPT-1 | OpenAI’s first GPT language model |
| 2020 | GPT-3 | Natural conversation, code, translation, etc. |
| 2021–2022 | Codex & DALL·E 2 | Code generation and image synthesis |
| 2023 | GPT-4 | Image and text input, deeper reasoning |
| 2024 | ChatGPT with Memory + Tools | Remembers details across chats; browses the web and uses tools |

Open Source or Open (AI)-pocalypse?

There’s an ongoing debate over whether AGI research should be open to everyone or kept closed to avoid misuse.

OpenAI started as a nonprofit, but as things got more powerful (and expensive), it pivoted to a “capped-profit” model.

Critics argue that transparency is shrinking. OpenAI insists it’s about safety and responsibility. And maybe a bit of not letting rogue billionaires build Skynet in their basement.

Real-World AGI Impacts: What Happens If They Succeed?

If OpenAI builds true AGI, everything could change:

- Jobs could shift massively (or disappear).

- Healthcare could improve dramatically.

- Education might become personalized at scale.

- Government decisions could be modeled by machine logic.

Sounds awesome… and terrifying. But don’t worry, we’re still in the “figuring-it-out” phase.

The Final Frontier or the Beginning?

OpenAI isn’t just building a product; it’s launching us into a new era. AGI could be humanity’s greatest tool or its weirdest existential crisis.

And while we joke about AI stealing jobs or writing cheesy romance novels, the truth is it’s serious business.

But hey, at least we can say we were there when it all started. Watching robots learn. Helping shape what comes next. And occasionally yelling at our voice assistant when it plays Nickelback unprompted.

TL;DR: Why Should You Care?

Because AGI is no longer just a science-fiction premise; it’s an explicit research goal. OpenAI is making real strides, and the stuff they’re building now will shape your tomorrow.

So read up. Stay curious. Question everything. And maybe treat your AI a little nicer. You never know who’s keeping score.

FAQs about OpenAI and AGI

What is Artificial General Intelligence (AGI)?

AGI refers to a type of AI that can understand, learn, and apply intelligence across a wide range of tasks, similar to how a human can. It’s not specialized like today’s AI tools; it’s meant to be more adaptable, creative, and self-improving.

Is OpenAI close to achieving AGI?

Not yet, but they’re working toward it. OpenAI’s current models, like GPT-4 and GPT-4o, are still generally considered narrow AI: impressive across language tasks, but not reliably able to reason, generalize, and act on their own the way a person can. AGI would require systems that do all of that across many domains.

How will AGI change the world?

If used ethically, AGI could revolutionize healthcare, science, education, and more. But it also comes with risks like job displacement, privacy concerns, or misuse in autonomous weapons.

What makes OpenAI different from other AI companies?

OpenAI says it is deeply focused on safety, long-term impacts, and cooperative alignment. It has also contributed to open research by publishing papers and releasing some models publicly (GPT-2’s weights, for example), though its flagship models are available only through its products and API.

Could AGI become dangerous?

It could. That’s why OpenAI says it is working with governments, academics, and industry leaders to make sure AGI development follows strict safety protocols and benefits all of humanity.

Will AGI take our jobs?

Possibly some of them. But ideally, AGI would augment human work, handling repetitive or risky tasks so humans can focus on creativity, strategy, and empathy-driven roles.

Can AGI have emotions or consciousness?

Not in the way humans do. AGI might simulate emotions for communication, but true consciousness or feelings are still a philosophical and technical mystery.

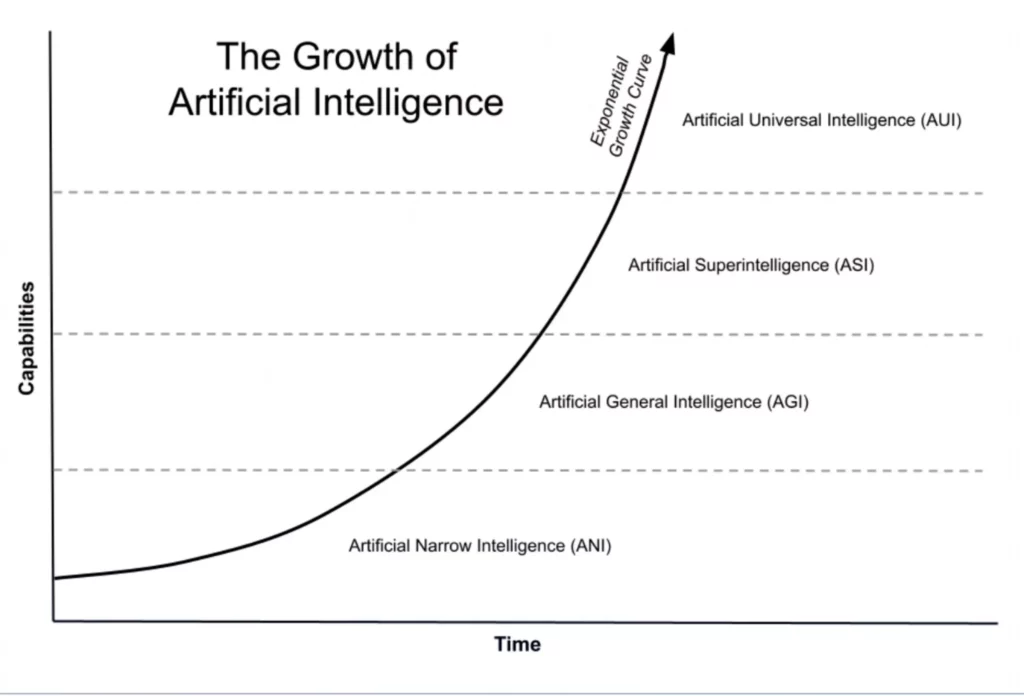

Is AGI the same as superintelligence?

No. AGI is a stepping stone. Superintelligence would surpass the best human minds in every domain. That’s the big scary thing people talk about in sci-fi. AGI might get us there… eventually.

How is OpenAI funded?

Originally a nonprofit, OpenAI later created a “capped-profit” model. It takes funding from investors (like Microsoft), but their returns are capped, a structure intended to keep the focus on the mission and on safety.

How can I stay updated on AGI development?

Follow OpenAI’s blog, major AI conferences, and trusted tech journalism sources. And if you’re really into it, start reading research papers. There’s a whole world of wild ideas out there!